To Sam Altman, Dario Amodei, Demis Hassabis, and all leaders shaping the future of artificial intelligence,

As you guide humanity into an era increasingly defined by AI-powered language models and intelligent interfaces, we respectfully offer a proposal that could serve the long-term interests of both the AI ecosystem and the open web: the creation and adoption of a public, standardized architecture that allows businesses to deterministically deliver structured, query-responsive product listings to AI systems.

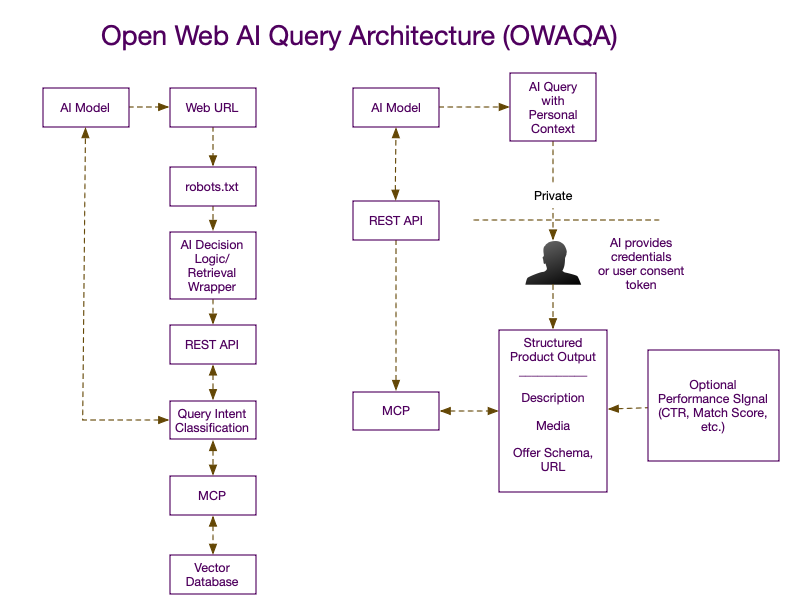

We propose an Open Web AI Query Architecture (OWAQA)—a set of conventions and protocols that empower e-commerce businesses to serve highly relevant, structured product content to AI systems, enabling those systems to provide more contextually accurate, user-aligned recommendations, while giving businesses the control necessary to optimize product-market fit.

Extend the robots.txt convention to include a reference to an llm.txt file.

llm.txt acts as a publicly accessible manifest that includes a human-readable and machine-readable summary of the company’s site, product taxonomy, and preferred query routes.
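As a rough illustration of how an AI crawler might discover the manifest, the sketch below scans a robots.txt body for a pointer to llm.txt. The directive name `LLM-Manifest`, the function `find_llm_manifest`, and the domain `shop.example.com` are all assumptions made for this example; this letter does not define a concrete syntax.

```python
from typing import Optional
from urllib.parse import urljoin

# Hypothetical directive name: the proposal does not fix a syntax for this.
MANIFEST_DIRECTIVE = "llm-manifest"


def find_llm_manifest(robots_txt: str, site_root: str) -> Optional[str]:
    """Return the absolute URL of the manifest referenced in robots.txt, if any."""
    for raw_line in robots_txt.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        # Split on the first colon only, so absolute URLs in the value survive.
        field, value = line.split(":", 1)
        if field.strip().lower() == MANIFEST_DIRECTIVE and value.strip():
            # Resolve a relative path against the site root.
            return urljoin(site_root, value.strip())
    return None


# Example robots.txt body using the assumed directive.
EXAMPLE_ROBOTS = """\
User-agent: *
Disallow: /cart/
LLM-Manifest: /llm.txt  # hypothetical directive
"""

manifest_url = find_llm_manifest(EXAMPLE_ROBOTS, "https://shop.example.com/")
```

Keeping the pointer in robots.txt means an AI system would need only one well-known file to learn where the manifest lives; a site without the directive simply yields `None`, and the crawler can fall back to its normal behavior.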

AI systems are inherently probabilistic. But businesses operate on determinism: they require accuracy, brand consistency, and control. They need to know:

This proposed architecture bridges the gap. It offloads the responsibility of granular content optimization to businesses—the parties best positioned to align product offerings to nuanced user needs. It gives AI systems a structured, interpretable, and optimized content pipeline. It gives marketers a coliseum to compete in—a framework where better messaging, better product-market alignment, and better user fit are rewarded.

In traditional web SEO and SEM, a business might maintain one or two variants of a product listing. But AI-driven interfaces change the game: a single product may resonate differently with a commuter, a college student, and a retiree. This architecture allows businesses to:

This model delivers more value to end users. The AI presents more relevant content. The business captures more conversions. Everyone wins.
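To make the idea of audience-specific listings concrete, here is a minimal sketch of how a business-side endpoint might choose among several variants of one product listing. The variant texts, the `pick_variant` function, and the word-overlap scoring are illustrative assumptions; a production system would more likely use embeddings in a vector database, which this letter names as an existing building block.

```python
import re
from typing import Dict, Set

# Hypothetical variants of a single product listing, keyed by audience.
VARIANTS: Dict[str, str] = {
    "commuter": "Lightweight folding e-bike that fits on a train and charges at your desk.",
    "college student": "Affordable e-bike for getting across campus, with a built-in lock.",
    "retiree": "Comfortable step-through e-bike with a gentle assist for relaxed rides.",
}


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def pick_variant(query: str, variants: Dict[str, str]) -> str:
    """Return the audience key whose label and listing share the most words with the query."""
    query_tokens = tokenize(query)
    return max(
        variants,
        key=lambda audience: len(query_tokens & tokenize(audience + " " + variants[audience])),
    )


student_choice = pick_variant("cheap bike for a college student on campus", VARIANTS)
commuter_choice = pick_variant("folding bike I can take on the train", VARIANTS)
```

The selection logic runs entirely on the business side, so the AI system receives one deterministic listing per query rather than guessing which phrasing fits the user.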

This system also enables:

This architecture is not science fiction. All its components—REST APIs, vector databases, schema.org, manifest files—exist today. What we need is consensus, adoption, and encouragement from the major AI players:

llm.txt and REST discovery standards.

We urge you to collaborate with the open web community and e-commerce ecosystem to create a future where AI models interact with structured, high-quality, business-optimized content in a transparent, accountable, and mutually beneficial way.

The future is not closed. It is collaborative.

Signed,

Matt Brutsché - 500 Rockets Marketing Think Tank & Digital Agency